This is Part 3, Publishing Spark UIs on Kubernetes, of an article series (see Part 1 and Part 2).

In this article, we walk through publishing the Spark master, worker, and driver UIs in our Kubernetes setup.

Publishing Master UI

To publish the Spark master UI, we define a Service of type LoadBalancer in the Kubernetes manifest:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: spark-master-expose
  labels:
    app: spark
    role: master
spec:
  selector:
    app: spark
    role: master
  type: LoadBalancer
  ports:
    - port: 8080
      targetPort: 8080
      protocol: TCP
      name: http
```

Since the service name spark-master is already taken by our ClusterIP service, we chose a different name with the -expose suffix.

After applying this change and refreshing the services, we should see an exposed port on our minikube cluster:

```
> minikube service list
|-------------|---------------------|--------------|-------------------------|
| NAMESPACE   | NAME                | TARGET PORT  | URL                     |
|-------------|---------------------|--------------|-------------------------|
| default     | kubernetes          | No node port |
| default     | spark-master        | No node port |
| default     | spark-master-expose | http/8080    | http://172.17.0.2:32473 |
| kube-system | kube-dns            | No node port |
|-------------|---------------------|--------------|-------------------------|
```
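If a script needs that URL (for example to open the UI or run a smoke test), it can be picked out of the table. The bash function below is a minimal, hypothetical sketch that assumes the pipe-separated layout shown above; when working against a live cluster, `minikube service spark-master-expose --url` prints the same URL directly and is the sturdier choice.

```bash
#!/usr/bin/env bash
# Hypothetical helper: prints the URL column for the service named $1,
# reading `minikube service list` output on stdin.
# Assumes the pipe-separated table layout shown above; rows without a URL
# (e.g. "No node port") print nothing.
service_url_from_list() {
  awk -F'|' -v name="$1" '
    {
      svc = $3; url = $5
      sub(/^[ \t]+/, "", svc); sub(/[ \t]+$/, "", svc)   # trim NAME column
      sub(/^[ \t]+/, "", url); sub(/[ \t]+$/, "", url)   # trim URL column
      if (svc == name && url != "") print url
    }'
}
```

Usage: `minikube service list | service_url_from_list spark-master-expose`.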

Exposing Workers and Driver

Exposing workers is a bit trickier. Their number can change, and some of them may fail from time to time. Creating a separate Kubernetes service for each of them would be tedious (although probably possible with Helm).

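Fortunately, Spark has a setting called spark.ui.reverseProxy. It has to be set on the master, on every worker and executor, and on the driver. When it is enabled, the master UI acts as an HTTP reverse proxy for the worker and application (driver) UIs, so they can be reached without direct access to their hosts.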
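To make the proxying concrete: in reverse-proxy mode the master serves each worker or application UI under a path derived from its ID. The sketch below assumes the pattern `/proxy/<id>/` on the master UI; the function name and the example IDs are hypothetical, and the links rendered on the master UI page are the authoritative source for the actual paths.

```bash
#!/usr/bin/env bash
# Hypothetical helper: builds the URL under which the master UI
# (with spark.ui.reverseProxy=true) proxies a worker or application UI.
# Assumption: proxied UIs live under /proxy/<id>/ on the master.
proxy_url() {
  local master_url="${1%/}"   # drop a trailing slash, if any
  local id="$2"               # a worker ID or application ID
  printf '%s/proxy/%s/\n' "$master_url" "$id"
}
```

Usage: `proxy_url http://172.17.0.2:32473 app-20200101120000-0000` (the application ID here is made up; take real ones from the master UI).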

To put this setting into every component, we create conf/spark-defaults.conf with one line:

```
spark.ui.reverseProxy true
```
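To check that the setting actually ended up in an image (for example from a container entrypoint), a small reader for this file format helps. This is a simplified, hypothetical sketch: it only understands the `key<whitespace>value` form used above, skips blank lines and `#` comments, and lets a later occurrence of a key win. Spark itself reads the file in Java's Properties format, which also accepts `key=value` and `key:value`.

```bash
#!/usr/bin/env bash
# Hypothetical helper: prints the value of property $2 from the
# spark-defaults.conf-style file $1 (simplified "key value" format only).
spark_conf_get() {
  awk -v key="$2" '
    /^[ \t]*#/ { next }                # skip comment lines
    NF == 0    { next }                # skip blank lines
    $1 == key && NF >= 2 {
      sub(/^[ \t]*[^ \t]+[ \t]+/, "")  # strip the key and the separator
      sub(/[ \t]+$/, "")               # trim trailing whitespace
      value = $0                       # a later occurrence overrides
    }
    END { if (value != "") print value }
  ' "$1"
}
```

Usage: `spark_conf_get "${SPARK_HOME}/conf/spark-defaults.conf" spark.ui.reverseProxy` should print `true`.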

This file is loaded by every Spark component, thanks to the classpath being specified explicitly in the startup scripts, for example worker.sh:

```bash
#!/bin/bash

# Print the environment (useful when debugging the container)
env

export SPARK_PUBLIC_DNS=$(hostname -i)

# Note the classpath: it includes ${SPARK_HOME}/conf
java ${JAVA_OPTS} \
  -cp "${SPARK_HOME}/conf:${SPARK_HOME}/jars/*" \
  org.apache.spark.deploy.worker.Worker \
  --port 7078 --webui-port 8080 \
  ${SPARK_MASTER_HOST}:${SPARK_MASTER_PORT}
```
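One more way to write the worker startup command is to collect its arguments in a bash array. Each part can then carry its own comment, which is impossible after a trailing backslash. The hypothetical variant below also passes the defaults file explicitly through the Worker's `--properties-file` option, so it does not depend on how the file is located; the function and variable names are illustrative, not part of the original scripts.

```bash
#!/usr/bin/env bash
# Hypothetical variant of worker.sh: builds the java command line in the
# WORKER_CMD array, with the defaults file passed via --properties-file.
build_worker_cmd() {
  WORKER_CMD=(
    java
    ${JAVA_OPTS}   # unquoted on purpose: JAVA_OPTS may hold several flags
    -cp "${SPARK_HOME}/conf:${SPARK_HOME}/jars/*"
    org.apache.spark.deploy.worker.Worker
    --port 7078 --webui-port 8080
    --properties-file "${SPARK_HOME}/conf/spark-defaults.conf"
    "${SPARK_MASTER_HOST}:${SPARK_MASTER_PORT}"
  )
}

# In the real script, replace the shell with the JVM so it receives signals:
#   build_worker_cmd && exec "${WORKER_CMD[@]}"
```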

You can use this file for any other Spark configuration your setup needs.
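When extra settings are added while building the image, a helper that updates an existing entry instead of appending a duplicate keeps the file tidy. The function below is a hypothetical sketch: it writes the space-separated form used in this article, does not understand `key=value` lines, and overwrites the file in place so its permissions are preserved.

```bash
#!/usr/bin/env bash
# Hypothetical build-time helper: sets property $2 to value $3 in the
# spark-defaults.conf-style file $1. An existing "key value" line is
# replaced; otherwise the property is appended.
spark_conf_set() {
  local file="$1" key="$2" value="$3" tmp
  [ -f "$file" ] || : > "$file"          # start from an empty file if needed
  tmp=$(mktemp) || return 1
  awk -v key="$key" -v value="$value" '
    $1 == key && !done { print key " " value; done = 1; next }
    $1 == key { next }                   # drop later duplicates of the key
    { print }                            # keep every other line as-is
    END { if (!done) print key " " value }
  ' "$file" > "$tmp" || { rm -f "$tmp"; return 1; }
  cat "$tmp" > "$file"                   # overwrite in place, keeping permissions
  rm -f "$tmp"
}
```

Usage: `spark_conf_set "${SPARK_HOME}/conf/spark-defaults.conf" spark.ui.reverseProxy true`.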

After building and applying the manifest:

```bash
kubectl apply -f manifest.yml
```

we inspect the published service URL in minikube:

```
> minikube service list
|-------------|---------------------|--------------|-------------------------|
| NAMESPACE   | NAME                | TARGET PORT  | URL                     |
|-------------|---------------------|--------------|-------------------------|
| default     | kubernetes          | No node port |
| default     | spark-master        | No node port |
| default     | spark-master-expose | http/8080    | http://172.17.0.2:32473 |
| kube-system | kube-dns            | No node port |
|-------------|---------------------|--------------|-------------------------|
```

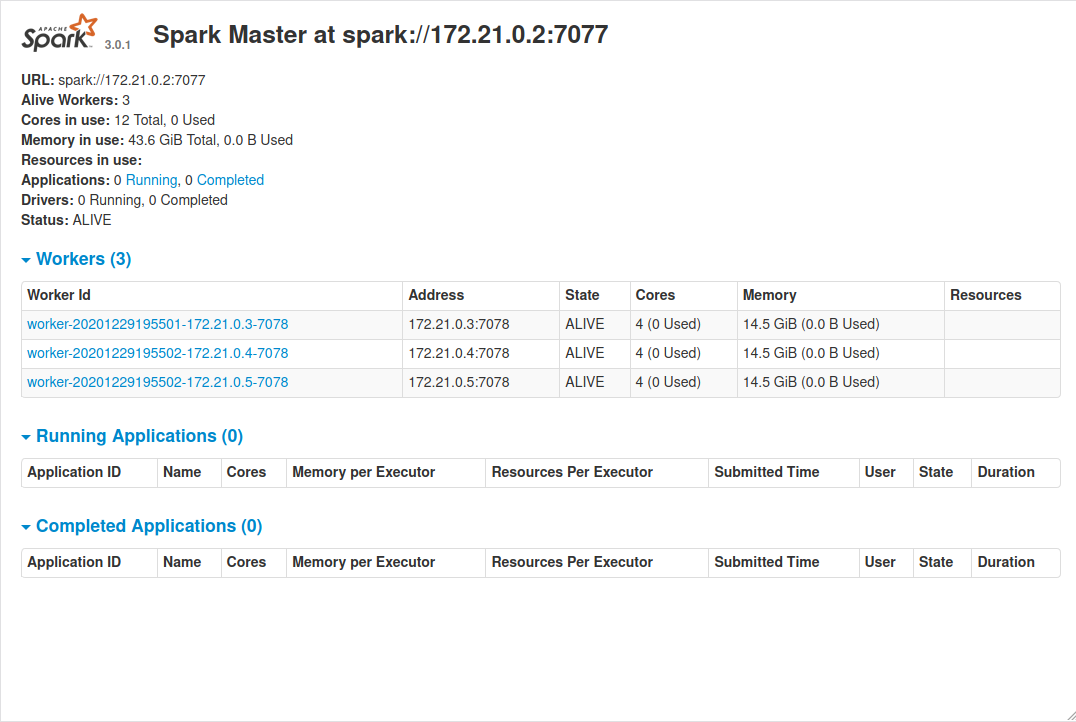

And browse to http://172.17.0.2:32473.

Conclusion

All the project files and roles are available in the docker-spark GitHub repository. Pre-built Docker images are available at hub.docker.com/r/michalklempa/spark.