When working with Avro schemas, you quickly reach the point where schema definitions for multiple entities start to overlap and the schema files grow long.

Just as object-oriented design lets you split a program into reusable classes, the same principle can be applied to the design of your Avro schema collection.

Unfortunately, the Avro schema definition language does not have a native require or import syntax.

One possible solution is to rewrite all the schemas in the Avro Interface Definition Language (IDL), which has an import feature (see [1]).

If you do not want to rewrite all your schemas, or you simply prefer the JSON schema definitions, this article introduces a mechanism to:

- design small schema files, each containing Avro named types

- programmatically compose these files into larger Avro schemas, one output file per named type

The article is accompanied by a full usage example and the source code of Avro Compose, an automatic schema composition tool.

Avro Schema Composition

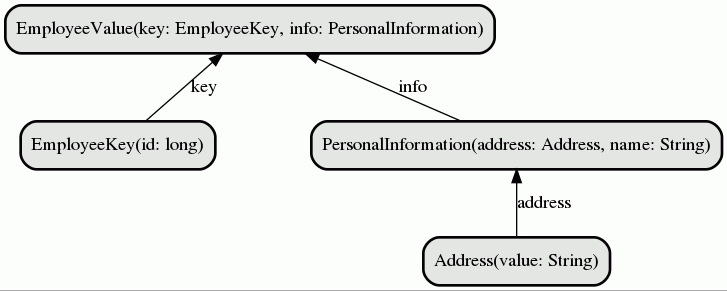

Suppose we have four entities to be composed into larger schemas:

- EmployeeKey

- EmployeeValue

- PersonalInformation

- Address

The composition is depicted in picture:

The entities are defined in three files:

- Employee.avsc, which contains both EmployeeKey and EmployeeValue

- PersonalInformation.avsc

- Address.avsc

Let's inspect Employee.avsc. Note that the PersonalInformation type is only referenced by name, without a declaration:

    {
      "doc": "This is an example of composite Avro value prepared for Kafka topic value with subtype, reusing common subtypes, like Location and PersonalInformation",
      "type": "record",
      "name": "com.michalklempa.avro.schemas.employee.EmployeeValue",
      "outputFileSuffix": "-value",
      "fields": [
        {
          "doc": "Lets repeat the key of this record, even if the record is outside Kafka topic, to retain the key of Employee",
          "name": "key",
          "type": {
            "doc": "This is an example of composite Avro key prepared for Kafka",
            "type": "record",
            "name": "com.michalklempa.avro.schemas.employee.EmployeeKey",
            "outputFileSuffix": "-key",
            "fields": [
              {
                "doc": "Employee id with regard to the company",
                "name": "id",
                "type": "long"
              }
            ]
          }
        },
        {
          "doc": "Employee Personal Information (which includes Location as a subtype)",
          "name": "personalInformation",
          "type": "com.michalklempa.avro.schemas.simple.PersonalInformation" <<<--- Type named, but no declaration in this file
        }
      ]
    }

Depending on the order in which the files are parsed, the Schema.Parser class may throw an exception because it encounters a type name before its definition:

    org.apache.avro.SchemaParseException: "com.michalklempa.avro.schemas.simple.PersonalInformation" is not a defined name. The type of the "personalInformation" field must be a defined name or a {"type": ...} expression.
        at org.apache.avro.Schema.parse(Schema.java:1635)
        at org.apache.avro.Schema$Parser.parse(Schema.java:1394)
        at org.apache.avro.Schema$Parser.parse(Schema.java:1365)
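To make the ordering problem concrete, here is a minimal Python sketch of how a safe parse order could be derived: collect the full names each file defines and the ones it only references, then sort the files so that definitions come first. This is an illustration under stated assumptions, not the actual implementation of Avro Compose. The helper names (`full_name`, `scan`, `parse_order`) are hypothetical, and the schema contents are abbreviated stand-ins for the three files.

```python
import json
from graphlib import TopologicalSorter  # standard library, Python 3.9+

PRIMITIVES = {"null", "boolean", "int", "long", "float", "double", "bytes", "string"}
NAMED_KINDS = ("record", "enum", "fixed")  # tuple, so the test also works for non-string types


def full_name(name, namespace):
    """Qualify a short name with the enclosing namespace (dotted names are already full)."""
    return name if "." in name or not namespace else f"{namespace}.{name}"


def scan(node, namespace, defined, referenced):
    """Collect the full names a schema defines and the ones it mentions by name."""
    if isinstance(node, str):
        if node not in PRIMITIVES:
            referenced.add(full_name(node, namespace))
    elif isinstance(node, list):  # union: scan every branch
        for branch in node:
            scan(branch, namespace, defined, referenced)
    elif isinstance(node, dict):
        kind = node["type"]
        if kind in NAMED_KINDS:
            name = full_name(node["name"], node.get("namespace", namespace))
            defined.add(name)
            inner = name.rpartition(".")[0]  # namespace seen by the fields
            for field in node.get("fields", []):
                scan(field["type"], inner, defined, referenced)
        elif kind == "array":
            scan(node["items"], namespace, defined, referenced)
        elif kind == "map":
            scan(node["values"], namespace, defined, referenced)
        else:  # annotated primitive, named reference, or nested type object
            scan(kind, namespace, defined, referenced)


def parse_order(schemas):
    """Return file names ordered so that every file comes after the files it depends on."""
    defined_in, needs = {}, {}
    for file, schema in schemas.items():
        defined, referenced = set(), set()
        scan(schema, "", defined, referenced)
        for name in defined:
            defined_in[name] = file
        needs[file] = referenced - defined  # names this file expects from elsewhere
    # Names that no file defines are ignored here; a real parser would report them.
    graph = {file: {defined_in[n] for n in names if n in defined_in}
             for file, names in needs.items()}
    return list(TopologicalSorter(graph).static_order())  # raises CycleError on cycles


# Abbreviated, hypothetical file contents (the real files carry more fields and docs).
NS = "com.michalklempa.avro.schemas"
schemas = {
    "Employee.avsc": json.loads(f"""{{
        "type": "record", "name": "{NS}.employee.EmployeeValue",
        "fields": [
          {{"name": "key", "type": {{"type": "record", "name": "{NS}.employee.EmployeeKey",
                                     "fields": [{{"name": "id", "type": "long"}}]}}}},
          {{"name": "personalInformation", "type": "{NS}.simple.PersonalInformation"}}
        ]}}"""),
    "PersonalInformation.avsc": {
        "type": "record", "name": "PersonalInformation", "namespace": f"{NS}.simple",
        "fields": [{"name": "address", "type": "Address"},
                   {"name": "name", "type": "string"}],
    },
    "Address.avsc": {
        "type": "record", "name": f"{NS}.simple.Address",
        "fields": [{"name": "value", "type": "string"}],
    },
}

order = parse_order(schemas)
print(order)  # ['Address.avsc', 'PersonalInformation.avsc', 'Employee.avsc']
```

Parsing the files in this order with a single parser instance means every named type is already known when it is referenced.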

Avro Compose to the rescue

We have prepared a utility, Avro Compose, which parses and composes these schemas regardless of the order of the input schema files.

Download the jar file:

    mvn dependency:copy -Dartifact=com.michalklempa:avro-compose:0.0.1 -DoutputDirectory=.

Now we can compose these schemas automatically:

    java -jar avro-compose-0.0.1-shaded.jar --output.schemas.directory ./generated/ ./schemas/

Looking into the output directory, we can see the generated schemas:

    generated/
    ├── com.michalklempa.avro.schemas.employee.EmployeeKey.avsc
    ├── com.michalklempa.avro.schemas.employee.EmployeeValue.avsc
    ├── com.michalklempa.avro.schemas.simple.Address.avsc
    └── com.michalklempa.avro.schemas.simple.PersonalInformation.avsc

The generated EmployeeValue schema has both PersonalInformation and Address embedded:

    {
      "type" : "record",
      "name" : "EmployeeValue",
      "namespace" : "com.michalklempa.avro.schemas.employee",
      "doc" : "This is an example of composite Avro value prepared for Kafka topic value with subtype, reusing common subtypes, like Location and PersonalInformation",
      "fields" : [ {
        "name" : "key",
        "type" : {
          "type" : "record",
          "name" : "EmployeeKey",
          "doc" : "This is an example of composite Avro key prepared for Kafka",
          "fields" : [ {
            "name" : "id",
            "type" : "long",
            "doc" : "Employee id with regard to the company"
          } ],
          "outputFileSuffix" : "-key"
        },
        "doc" : "Lets repeat the key of this record, even if the record is outside Kafka topic, to retain the key of Employee"
      }, {
        "name" : "personalInformation",
        "type" : {
          "type" : "record",
          "name" : "PersonalInformation",
          "namespace" : "com.michalklempa.avro.schemas.simple",
          "doc" : "PersonalInformation, including Location as a subtype",
          "fields" : [ {
            "name" : "address",
            "type" : {
              "type" : "record",
              "name" : "Address",
              "doc" : "Address",
              "fields" : [ {
                "name" : "value",
                "type" : {
                  "type" : "string",
                  "avro.java.string" : "String"
                }
              } ]
            },
            "doc" : "Address"
          }, {
            "name" : "name",
            "type" : {
              "type" : "string",
              "avro.java.string" : "String"
            }
          }, {
            "name" : "surname",
            "type" : {
              "type" : "string",
              "avro.java.string" : "String"
            }
          } ]
        },
        "doc" : "Employee Personal Information (which includes Location as a subtype)"
      } ],
      "outputFileSuffix" : "-value"
    }
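The embedding seen in this output can be approximated in a few lines of Python. The sketch below replaces a named reference with its definition the first time the name occurs and keeps a plain name reference afterwards, since Avro allows a named type to be defined only once per schema. It is an illustration, not the code of Avro Compose. The function `compose`, the `definitions` lookup table and the abbreviated schemas are hypothetical, and the sketch assumes every definition carries its full name or an explicit namespace.

```python
import json

PRIMITIVES = {"null", "boolean", "int", "long", "float", "double", "bytes", "string"}
NAMED_KINDS = ("record", "enum", "fixed")  # tuple, so the test also works for non-string types


def full_name(name, namespace):
    """Qualify a short name with the enclosing namespace (dotted names are already full)."""
    return name if "." in name or not namespace else f"{namespace}.{name}"


def compose(node, definitions, namespace="", seen=None):
    """Return a copy of `node` in which known named references are replaced by definitions."""
    seen = set() if seen is None else seen
    if isinstance(node, str):
        name = full_name(node, namespace)
        if node in PRIMITIVES or name not in definitions:
            return node  # primitive or unknown name: leave untouched
        if name in seen:
            return name  # already defined earlier in this schema: keep a reference
        return compose(definitions[name], definitions, namespace, seen)
    if isinstance(node, list):  # union: compose every branch
        return [compose(branch, definitions, namespace, seen) for branch in node]
    if isinstance(node, dict):
        out = dict(node)  # shallow copy keeps extra attributes such as "doc"
        kind = node["type"]
        if kind in NAMED_KINDS:
            name = full_name(node["name"], node.get("namespace", namespace))
            seen.add(name)
            inner = name.rpartition(".")[0]  # namespace seen by the fields
            if "fields" in node:
                out["fields"] = [
                    {**field, "type": compose(field["type"], definitions, inner, seen)}
                    for field in node["fields"]
                ]
        elif kind == "array":
            out["items"] = compose(node["items"], definitions, namespace, seen)
        elif kind == "map":
            out["values"] = compose(node["values"], definitions, namespace, seen)
        else:  # annotated primitive, named reference, or nested type object
            out["type"] = compose(kind, definitions, namespace, seen)
        return out
    return node


# Abbreviated, hypothetical definitions keyed by full name.
NS = "com.michalklempa.avro.schemas"
definitions = {
    f"{NS}.simple.Address": {
        "type": "record", "name": "Address", "namespace": f"{NS}.simple",
        "fields": [{"name": "value", "type": "string"}],
    },
    f"{NS}.simple.PersonalInformation": {
        "type": "record", "name": "PersonalInformation", "namespace": f"{NS}.simple",
        "fields": [{"name": "address", "type": "Address"},
                   {"name": "name", "type": "string"}],
    },
}
employee = {
    "type": "record", "name": f"{NS}.employee.EmployeeValue",
    "fields": [{"name": "personalInformation",
                "type": f"{NS}.simple.PersonalInformation"}],
}

composed = compose(employee, definitions)
print(json.dumps(composed, indent=2))
```

The input schema is left unmodified, so the same definitions can be reused to compose one output schema per named type.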

That's it. For more advanced examples, head to the Avro Compose GitHub repository.

References

[1] Björn Beskow: Serialization, Schema Compositionality and Apache Avro